ICRA 2021 -- Learning World Transition Model for Socially Aware Robot Navigation

Abstract

Moving through dynamic pedestrian environments is an important requirement for autonomous mobile robots. We present a model-based reinforcement learning approach that lets robots navigate through crowded environments. The navigation policy is trained with both real interaction data from a multi-agent simulation and virtual data from a deep transition model that predicts how the dynamics surrounding the robot evolve. A reward function that accounts for social conventions guides the training of the policy. Specifically, the policy model takes a laser scan sequence and the robot’s own state as input and outputs a steering command. The laser sequence is further transformed into stacked local obstacle maps that are disentangled from the robot’s ego-motion, which separates static from dynamic obstacles and simplifies model training. We observe that a policy trained with our method needs significantly less real interaction data from the simulator while achieving a success rate in social navigation tasks similar to that of other methods. Experiments in multiple social scenarios, both in simulation and on real robots, show that the learned policy guides the robots to their targets successfully and in a socially compliant manner.
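
The abstract turns the laser sequence into stacked local obstacle maps disentangled from the robot's ego-motion. Below is a minimal sketch of that idea. It assumes 2-D scans with a known odometry pose `(x, y, theta)` for each scan. Every past scan is re-expressed in the latest robot frame and rasterized into a robot-centered occupancy grid. The function names, the 64 x 64 grid, and the 0.1 m resolution are hypothetical choices, not details taken from the paper.

```python
import numpy as np


def scan_to_points(ranges, angle_min, angle_increment, max_range):
    """Convert one 2-D laser scan into (N, 2) points in the robot frame at scan time."""
    ranges = np.asarray(ranges, dtype=float)
    angles = angle_min + angle_increment * np.arange(ranges.size)
    valid = np.isfinite(ranges) & (ranges < max_range)  # drop beams with no return
    r, a = ranges[valid], angles[valid]
    return np.stack([r * np.cos(a), r * np.sin(a)], axis=1)


def to_current_frame(points, pose_past, pose_now):
    """Re-express points seen at pose_past in the robot frame at pose_now.

    Poses are (x, y, theta) in a shared odometry frame (assumed to be available).
    """
    x0, y0, t0 = pose_past
    x1, y1, t1 = pose_now
    # Past robot frame -> odometry frame: rotate by t0, then translate.
    c0, s0 = np.cos(t0), np.sin(t0)
    world = points @ np.array([[c0, s0], [-s0, c0]]) + np.array([x0, y0])
    # Odometry frame -> current robot frame: translate back, then rotate by -t1.
    c1, s1 = np.cos(t1), np.sin(t1)
    return (world - np.array([x1, y1])) @ np.array([[c1, -s1], [s1, c1]])


def rasterize(points, size=64, resolution=0.1):
    """Binary occupancy grid (size x size cells) centered on the current robot pose."""
    grid = np.zeros((size, size), dtype=np.uint8)
    idx = np.floor(points / resolution).astype(int) + size // 2
    inside = np.all((idx >= 0) & (idx < size), axis=1)
    grid[idx[inside, 1], idx[inside, 0]] = 1  # row index = y, column index = x
    return grid


def stacked_local_maps(point_sets, poses, size=64, resolution=0.1):
    """Stack one grid per scan (oldest first), all expressed in the latest robot frame."""
    pose_now = poses[-1]
    return np.stack([rasterize(to_current_frame(pts, pose, pose_now), size, resolution)
                     for pts, pose in zip(point_sets, poses)])


# Robot drives 0.5 m forward between two scans of a static wall 2 m ahead.
wall_then = scan_to_points([2.0], 0.0, 0.01, max_range=10.0)
wall_now = scan_to_points([1.5], 0.0, 0.01, max_range=10.0)
maps = stacked_local_maps([wall_then, wall_now], [(0.0, 0.0, 0.0), (0.5, 0.0, 0.0)])
print(maps.shape, bool((maps[0] == maps[1]).all()))  # (2, 64, 64) True
```

All channels share the current frame. A static wall therefore occupies the same cells in every channel, while a moving pedestrian appears at different cells from channel to channel. This illustrates the static/dynamic separation the abstract describes.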


Comparison


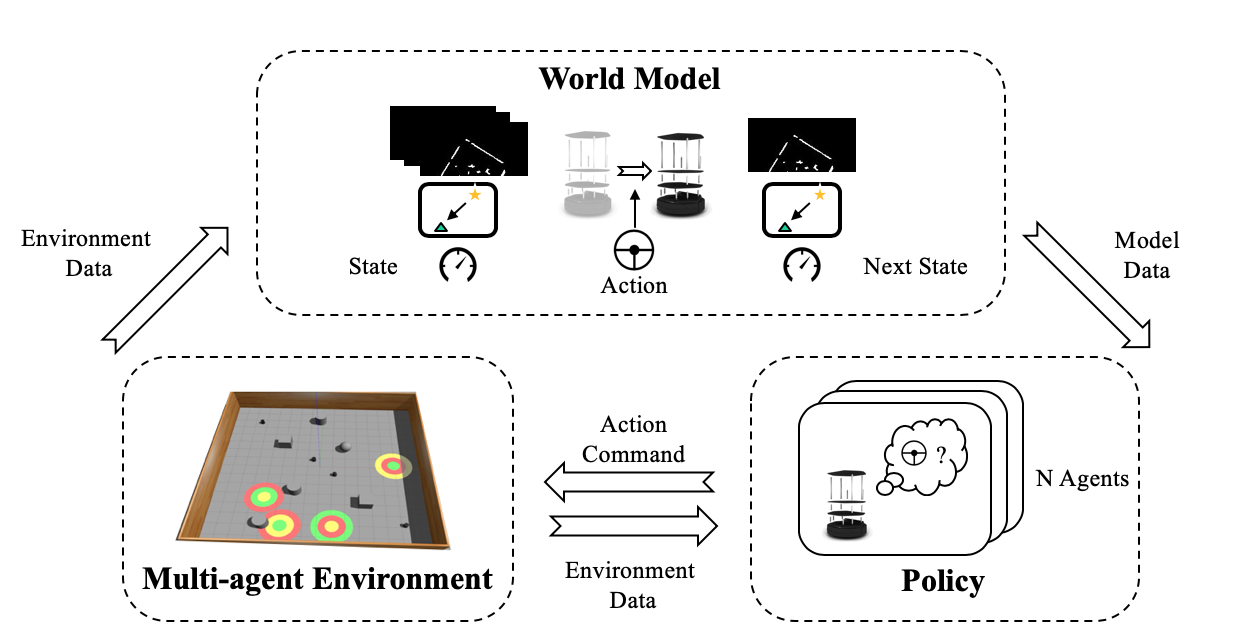

Framework


Transition Model
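
Training the policy on both real simulator data and virtual data from a transition model follows the general Dyna pattern. A learned model is unrolled from real states to produce extra transitions, and training batches mix the two sources. The sketch below is a generic illustration under that assumption, not the authors' training loop. `model_rollouts`, `mixed_batch`, `real_ratio`, and the rollout horizon are hypothetical, and a toy 1-D model stands in for the deep transition model.

```python
import random
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Transition:
    state: float
    action: float
    reward: float
    next_state: float


def model_rollouts(model: Callable[[float, float], Tuple[float, float]],
                   policy: Callable[[float], float],
                   start_states: List[float],
                   horizon: int) -> List[Transition]:
    """Unroll the (learned) model from real start states to create virtual transitions."""
    virtual = []
    for s in start_states:
        for _ in range(horizon):
            a = policy(s)
            s_next, r = model(s, a)  # predicted next state and reward (assumed interface)
            virtual.append(Transition(s, a, r, s_next))
            s = s_next
    return virtual


def mixed_batch(real: List[Transition], virtual: List[Transition],
                batch_size: int, real_ratio: float,
                rng: random.Random) -> List[Transition]:
    """Sample a training batch with a fixed fraction of real transitions."""
    n_real = min(len(real), round(batch_size * real_ratio))
    n_virtual = min(len(virtual), batch_size - n_real)
    return rng.sample(real, n_real) + rng.sample(virtual, n_virtual)


def toy_model(s: float, a: float) -> Tuple[float, float]:
    """Stand-in for the transition model: move by the action toward a goal at 5.0."""
    return s + a, -abs(s + a - 5.0)


def toy_policy(s: float) -> float:
    return 1.0  # always step forward


virtual = model_rollouts(toy_model, toy_policy, start_states=[0.0, 2.0], horizon=3)
real = [Transition(0.0, 1.0, -4.0, 1.0) for _ in range(10)]
batch = mixed_batch(real, virtual, batch_size=8, real_ratio=0.25, rng=random.Random(0))
print(len(virtual), len(batch))  # 6 8
```

A lower `real_ratio` leans more on the model's predictions. This is where reduced real interaction data could pay off, and also where model error would start to affect the policy.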


Policy Model
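
The abstract says the policy is trained with a reward function that considers social conventions, but it does not list the reward terms. The sketch below shows one common shape for such a reward: a collision penalty, an arrival bonus, dense progress toward the goal, and a discomfort penalty when the robot intrudes on a pedestrian's personal space. Every term, weight, and distance is a hypothetical placeholder rather than the paper's reward.

```python
import math

# Hypothetical constants; not values from the paper.
ROBOT_RADIUS = 0.3     # m
GOAL_TOLERANCE = 0.3   # m
DISCOMFORT_DIST = 0.5  # m of clearance the robot should keep from pedestrians


def social_reward(prev_pos, pos, goal, pedestrians,
                  w_progress=2.5, r_arrival=15.0,
                  r_collision=-15.0, w_discomfort=5.0):
    """Reward for one step (hypothetical terms).

    prev_pos, pos, goal: (x, y) tuples in meters.
    pedestrians: list of ((x, y), radius) tuples.
    """
    # Clearance between the robot's disc and the nearest pedestrian's disc.
    min_clear = min((math.dist(pos, p) - ROBOT_RADIUS - r for p, r in pedestrians),
                    default=math.inf)
    if min_clear < 0.0:
        return r_collision  # discs overlap: treated as a collision
    d_goal = math.dist(pos, goal)
    if d_goal < GOAL_TOLERANCE:
        return r_arrival
    # Dense shaping: reward progress toward the goal during this step.
    reward = w_progress * (math.dist(prev_pos, goal) - d_goal)
    # Social term: penalize intruding into a pedestrian's comfort zone.
    if min_clear < DISCOMFORT_DIST:
        reward -= w_discomfort * (DISCOMFORT_DIST - min_clear)
    return reward


# Same 1 m of progress, without and with a pedestrian passing close by.
print(social_reward((0, 0), (1, 0), (5, 0), []))
print(social_reward((0, 0), (1, 0), (5, 0), [((1, 1), 0.3)]))
```

With a pedestrian 0.4 m of clearance away, the step earns less than the same progress in free space. This is how a discomfort term can push the policy toward leaving more room around people.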

Figure panels (two examples): prediction_list, prediction_ours, prediction_label

Prediction

Figure: Training Curves

Figure: Real Robot Experiments

Experiments